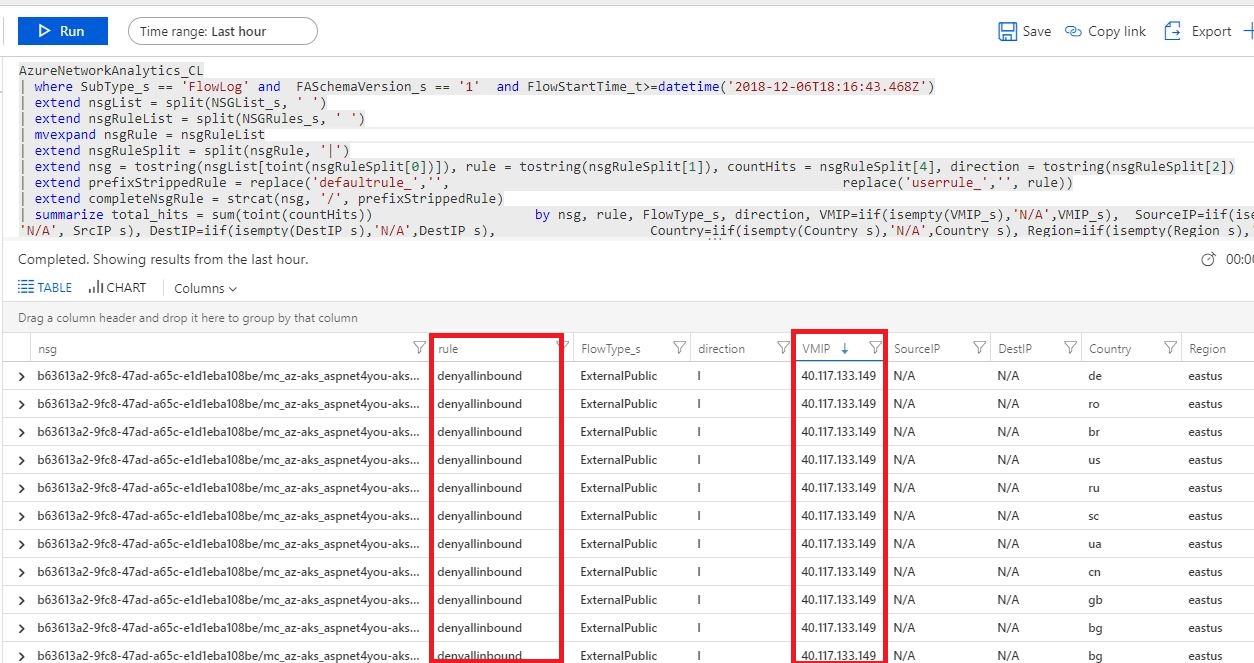

Azure AKS Network Analytics: where are these requests to the Kubernetes cluster coming from?

I am a little bit puzzled by Azure Network Analytics! Can someone help resolve this mystery?

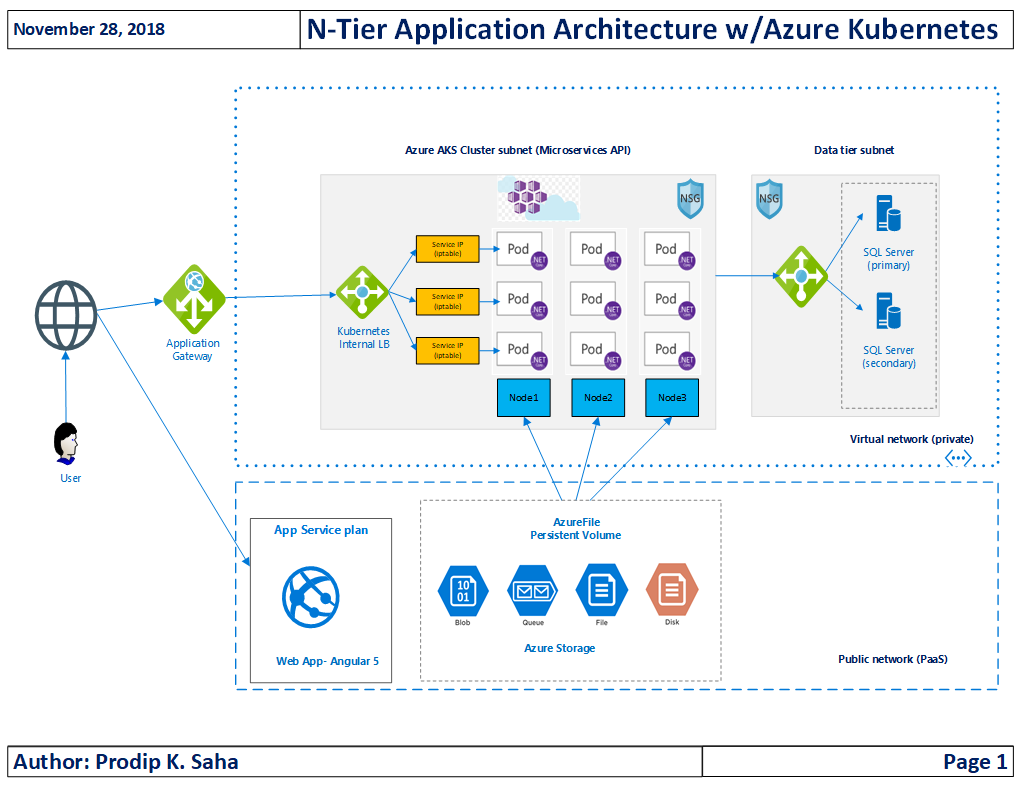

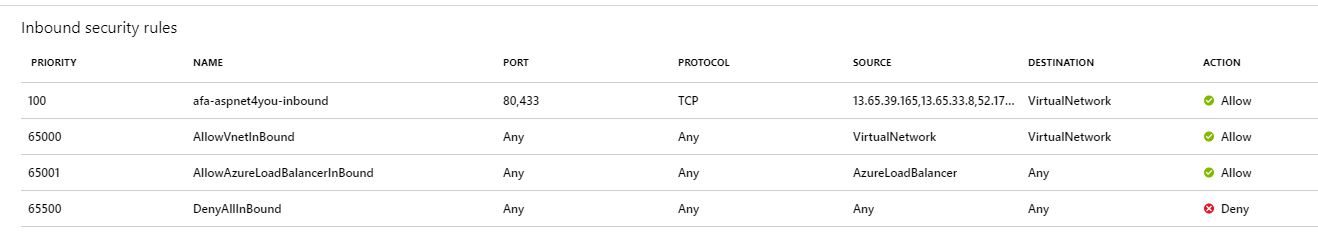

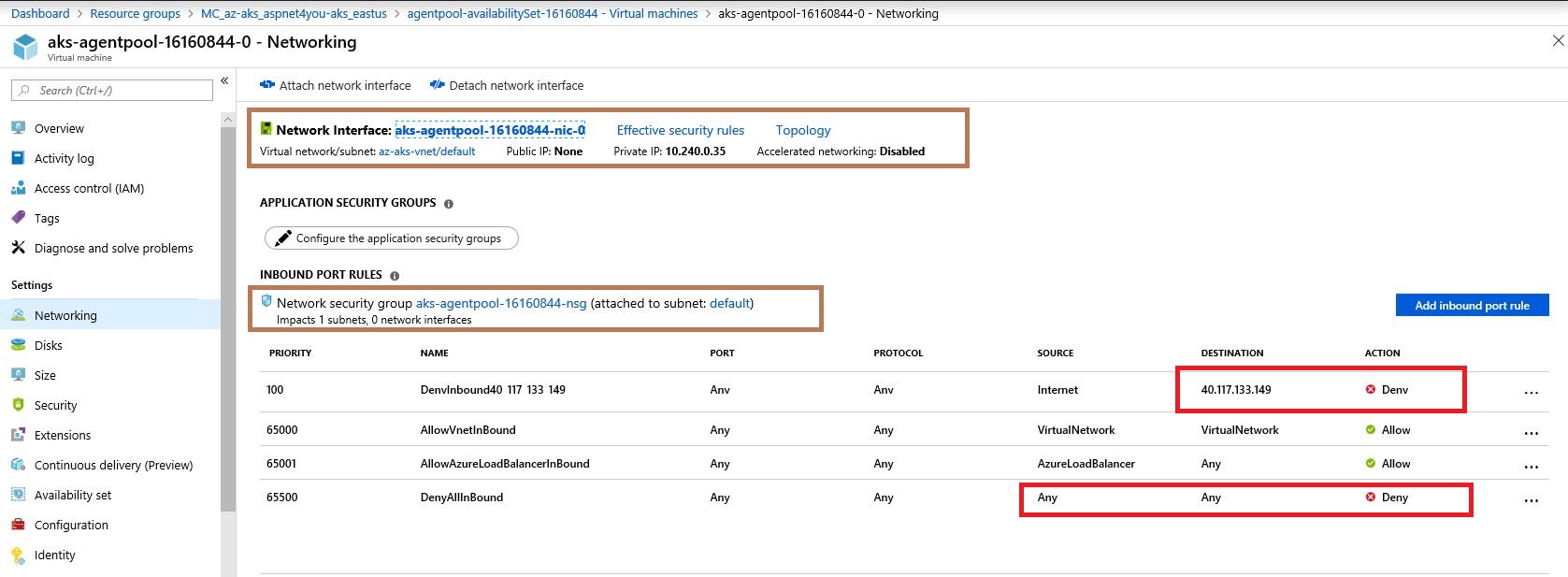

My Kubernetes cluster in Azure is private. It is joined to a VNet and no public IP is exposed anywhere. The service is configured with an internal load balancer, and the Application Gateway calls that internal load balancer. The NSG blocks all inbound internet traffic to the Application Gateway; only trusted NAT IPs are allowed at the NSG.

The question is: I am seeing a lot of internet traffic coming to AKS on the VNet. It is denied, of course! I don't have the public IP 40.117.133.149 anywhere in the subscription. So how are these requests reaching AKS?

You can try calling the Application Gateway from the internet and you will not get any response: http://23.100.30.223/api/customer/FindAllCountryProvinceCities?country=United%20States&state=Washington

You will get a successful response if you call the Azure Function: https://afa-aspnet4you.azurewebsites.net/api/aks/api/customer/FindAllCountryProvinceCities?country=United%20States&state=Washington

This is possible because of the following NSG rules!

Thank you for taking time to answer my query.

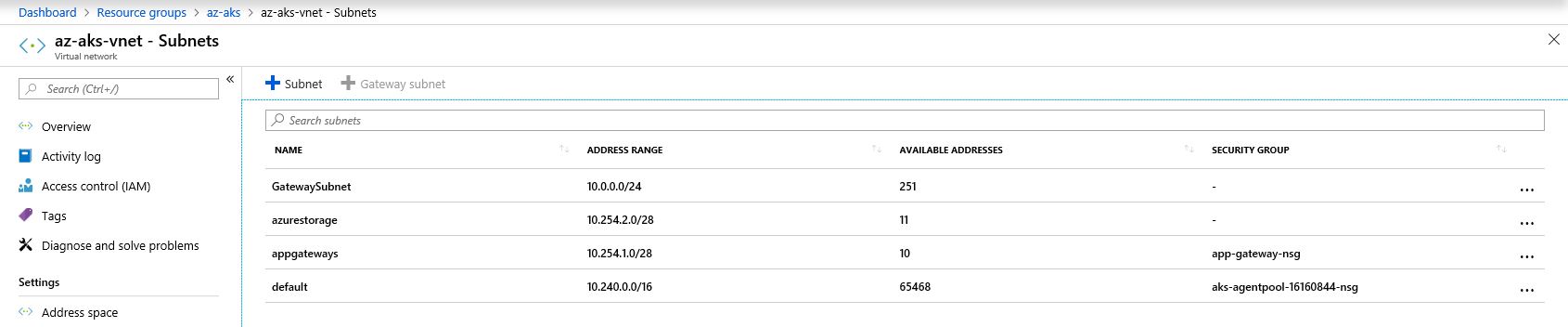

In response to @CharlesXu, I am sharing a little more about the AKS networking. The AKS network is made up of a few address spaces:

Also, no public IP is assigned to either of the two nodes in the cluster. Only a private IP is assigned to each VM node. Here is an example for node-0:

I don't understand why I am seeing inbound requests to 40.117.133.149 within my cluster!

1 Answer

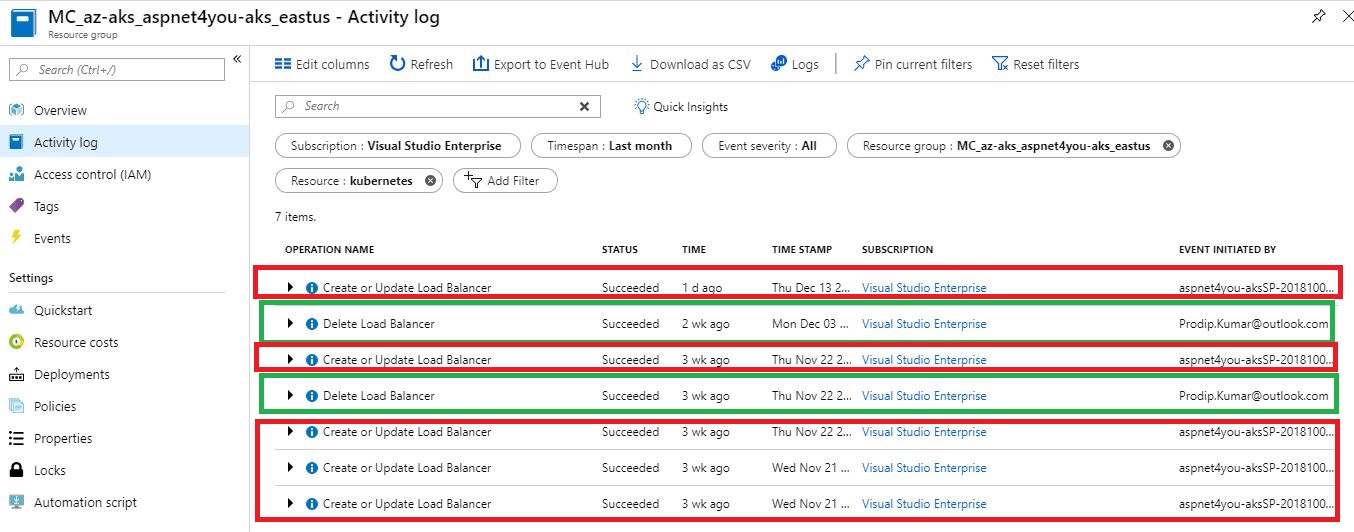

After searching all the settings and activity logs, I finally found the answer to the mystery IP! A load balancer with an external IP was automatically created as part of the nginx ingress service when I restarted the VMs. The NSG was updated automatically to allow internet traffic to ports 80/443. I manually deleted the public load balancer along with its IP, but the bad actors were still calling the IP on different ports, which are denied by the default inbound NSG rule.

To reproduce this, I removed the public load balancer again along with the public IP. AKS recreated them once I restarted the VMs in the cluster! It's like a cat-and-mouse game!

I think we can update the ingress service annotations to specify service.beta.kubernetes.io/azure-load-balancer-internal: "true". I don't know why Microsoft decided to auto-provision a public load balancer in the cluster. It's a risk, and Microsoft should correct the behavior by creating an internal load balancer instead.
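
For anyone who wants to try the annotation, here is a rough sketch of what the ingress controller Service could look like. I have not verified this exact manifest against my cluster. The service name, namespace, and selector label are placeholders I made up, so replace them with whatever your nginx ingress install actually uses. The only parts taken from the answer above are the annotation itself and ports 80/443.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller   # placeholder name, adjust to your install
  namespace: ingress               # placeholder namespace
  annotations:
    # Asks Azure to provision an internal load balancer instead of a public one
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: nginx-ingress             # placeholder label, must match the controller pods
  ports:
    - name: http
      port: 80
      targetPort: 80
    - name: https
      port: 443
      targetPort: 443
```

With the annotation in place, the Service should get a private IP from the VNet rather than a public IP. If the ingress controller was installed with Helm, the annotation probably needs to go into the chart values instead, otherwise an upgrade may overwrite a manual edit.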